Science-Writing Intern, Johns Hopkins Institute for Basic Biomedical Sciences

This story is the first in a periodic series featuring the technologies and techniques that scientists use in biomedical research.

Tucked away in the back room of a Johns Hopkins research building sits a seemingly modest setup: a quietly humming desktop computer and a pair of goggles.

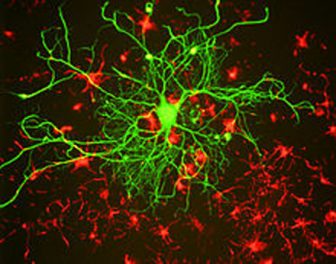

But the true magic is behind those goggles, which can immerse the viewer in a 3D, interactive version of human anatomy. Looking at a brain slice in virtual reality is like “standing in a forest of neurons,” says Megan Wood, PhD, the postdoctoral fellow at the Johns Hopkins University School of Medicine who has taken the lead on adapting virtual reality software for her lab’s use.

Stalks of neurons stretch across the field of view, their fibers traveling to other parts of the brain to transmit messages. Looking up at a canopy of spindly dendrites brings a kind of childlike wonder.

Wood works in the laboratory of Paul Fuchs, PhD, vice chair for research and John E. Bordley Professor of Otolaryngology–Head and Neck Surgery at the Johns Hopkins University School of Medicine.

Fuchs studies the neuroscience of hearing. Wood and other trainees in the lab use the virtual reality system to immerse themselves in the delicate, spiral, shell-shaped structure of the cochlea, the part of the inner ear that converts sound vibrations into electrical impulses that nerves carry to the brain.

Instead of flattening the curved structure of the cochlea into a 2D image on a computer screen, virtual reality lets researchers get up close and personal with it. They can see the rows of sensory cells lined up around the spiral, with neurons trailing beneath them. The colors assigned to different cell types can be turned up or down, making different parts of the specimen light up around the viewer.

With virtual reality, it’s easier to visualize how the data exist in real-world dimensions, says Wood. Researchers can also navigate through the anatomy with handheld controllers, turn the structure on its side and view it from angles that would otherwise be awkward to capture. A “cutting” tool built into the software lets researchers make artificial slices through their virtual tissue samples.

The program can also make some of research’s more tedious tasks, such as counting cells or tracing structures through tissue, easier, not to mention more entertaining.

“You’re just climbing along them, tracing them,” says Wood. “That’s a totally different sensation. You’re so much a part of what you’re looking at.”

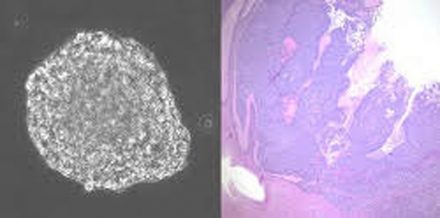

The virtual images are most often built from confocal microscopy data. Confocal microscopy, an imaging technique widely used in cell biology research, focuses a laser at precise depths within a tissue sample and scans the tissue one layer at a time. This produces a series of images, typically in bright fluorescent colors, that can be viewed layer by layer or stacked together into a 3D model.

These models can also be displayed on an ordinary computer screen, without virtual reality. However, some nuances are lost when the data are translated into flat images, says Wood. It can be harder to understand spatial relationships fully and to tell how structures curve toward or away from the viewer. In addition, she says, surrounding tissue and other cells can get in the way when researchers are trying to examine particular features.

“A flat image doesn’t tell you much about where those structures are in 3D space,” says Wood.

Virtual reality has also been a useful tool for teaching the high school and undergraduate students who work in the lab, Wood says. Many of the ear’s anatomical structures are notoriously difficult to explain. But when students don the goggles, they can see the structure in 3D, turn it and move through it, which makes it much easier to grasp the anatomy intuitively.

The lab is also testing virtual reality images generated from electron microscopy, another imaging technique, which provides fine-grained pictures of tiny cellular structures. Unlike confocal microscopy, electron microscopy requires the tissue to be physically sliced beforehand, with each slice then imaged one layer at a time.

Wood and her colleagues have used the virtual reality software to stack electron microscopy images together.

The software, called syGlass, is currently in the beta testing phase.

“It’s still an evolving product,” says Wood. “I’m glad that we’re getting in on it early, so we can help figure out how it’s best used.”

A video accompanies this release. (Credit: Megan Wood)